Leveraging agentic LangGraph AI with Azure and Microsoft Graph

Project Overview

This proof-of-concept shows how LangGraph can power AI-driven workflows that have short-term and long-term memory and that extend their capabilities through integration with Microsoft 365. As a Cloud Architect, I was also curious how well it integrates with Azure's enterprise-grade infrastructure.

The full source code for this article is available on GitHub.

Key features:

- 👤 Persistent user profiles - The system learns your timezone, working hours, and preferences, then uses that context to provide better responses.

- 💾 Memory across sessions - Conversations and user data persist between sessions using dual storage: short-term memory for conversation threads and long-term memory for user profiles.

- 💬 Natural language scheduling - Create and view Outlook calendar events simply by chatting, without navigating menus.

- 🏢 Enterprise-grade integration - Builds on Azure infrastructure components, including Azure AI Foundry, Azure Cosmos DB, and Azure Database for PostgreSQL, for security, scalability, and resilience.

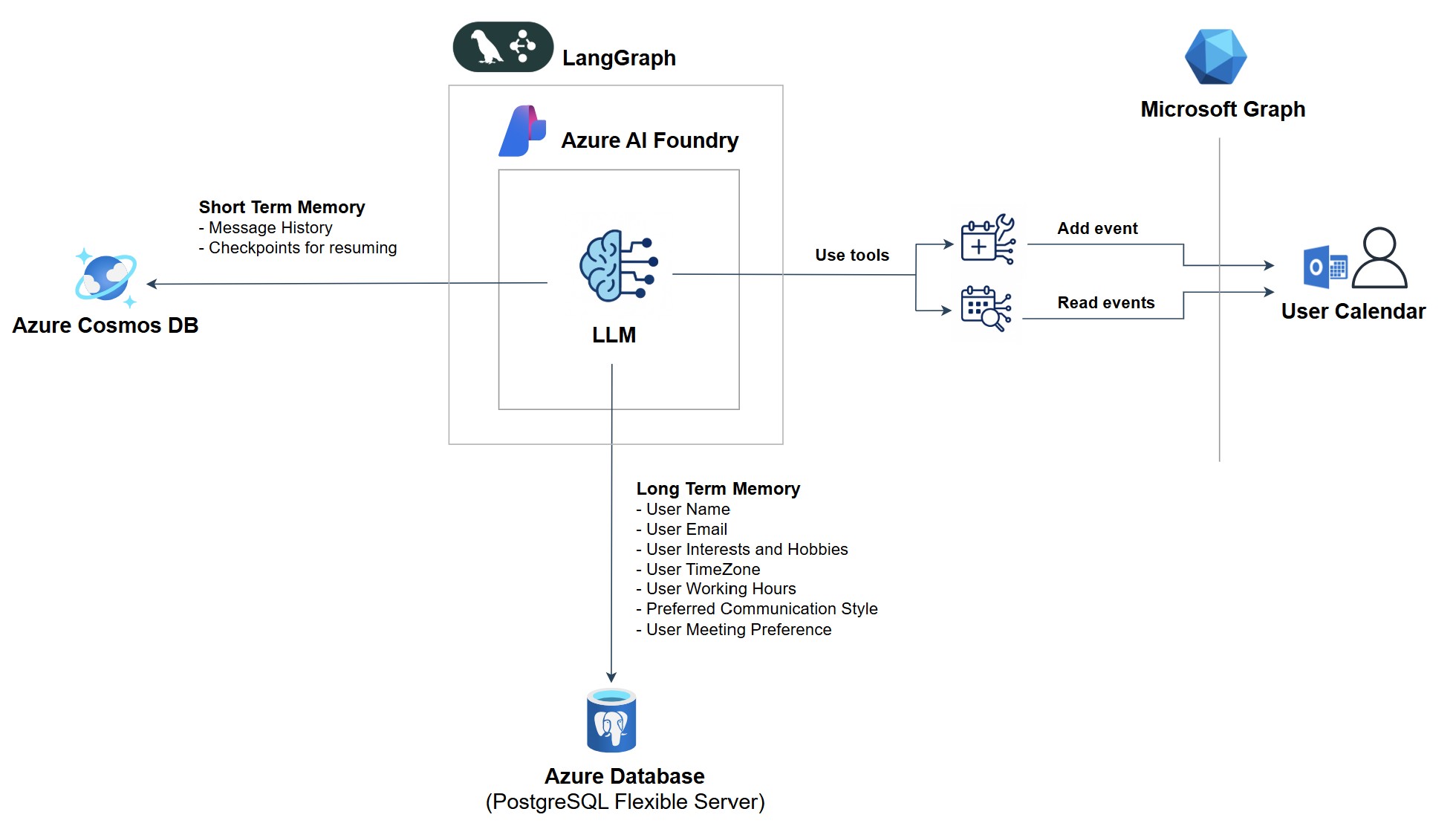

The following diagram illustrates the LangGraph workflow, showing how the AI assistant processes user input, invokes tools, and manages memory updates.

Prerequisites

As a best practice when experimenting with proof-of-concepts, including this one, set everything up in a fully isolated Microsoft Entra ID tenant and Azure subscription.

To run this proof-of-concept, you will need:

- 🧪 Lab environment with Jupyter - Set up an environment using Jupyter in Docker. This provides an isolated, reproducible workspace for running code and experiments.

Example (run in your terminal):

```bash
docker run -d \
  -e LANGCHAIN_TRACING_V2="true" \
  -e LANGSMITH_ENDPOINT="https://api.smith.langchain.com" \
  -e LANGCHAIN_API_KEY="<your-langchain-api-key>" \
  -e LANGCHAIN_PROJECT="default" \
  -e COSMOSDB_ENDPOINT="<your-cosmosdb-endpoint>" \
  -e COSMOSDB_KEY="<your-cosmosdb-key>" \
  -e AZURE_OPENAI_API_KEY="<your-azure-openai-api-key>" \
  -e AZURE_OPENAI_ENDPOINT="<your-azure-openai-endpoint>" \
  -e CLIENT_ID="<your-client-id>" \
  -e CLIENT_SECRET="<your-client-secret>" \
  -e TENANT_ID="<your-tenant-id>" \
  -e POSTGRES_HOST="<your-postgres-host>" \
  -e POSTGRES_PORT="5432" \
  -e POSTGRES_DB="<your-postgres-db>" \
  -e POSTGRES_USER="<your-postgres-user>" \
  -e POSTGRES_PASSWORD="<your-postgres-password>" \
  -p 8888:8888 \
  -w /app my-jupyter
```

Visit http://127.0.0.1:8888 in your browser and log in with the token printed in the container logs (because the container runs detached with `-d`, view them with `docker logs <container-id>`). You will also need the Jupyter extension for VS Code.

- 🦜 LangGraph/LangSmith account - Sign up for a LangSmith account and obtain your API key. This is required for tracing LangGraph runs in LangSmith.

- 🔐 App registration in Microsoft Entra ID - Register an application in Microsoft Entra ID and grant it API permissions to read and write the user's calendar. Refer to the Microsoft Entra documentation on app registration for details.

- ☁️ Deployed Azure resources - The following Azure services are deployed with a basic configuration that restricts access to allowed public IP addresses:

- Azure AI Foundry for AI model hosting and management.

- Azure Cosmos DB for short-term memory storage.

- Azure Database for PostgreSQL Flexible Server for long-term memory storage.
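Before running the notebook, it can help to confirm that the credentials and connection settings passed with `-e` in the `docker run` command are actually visible to the Jupyter kernel. The following is a minimal sketch; the helper name `missing_env_vars` is hypothetical, and the variable list simply mirrors the command above.

```python
import os

# Names mirror the credential and connection `-e` flags of the docker run command above.
REQUIRED_VARS = [
    "LANGCHAIN_API_KEY",
    "COSMOSDB_ENDPOINT",
    "COSMOSDB_KEY",
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_ENDPOINT",
    "CLIENT_ID",
    "CLIENT_SECRET",
    "TENANT_ID",
    "POSTGRES_HOST",
    "POSTGRES_PORT",
    "POSTGRES_DB",
    "POSTGRES_USER",
    "POSTGRES_PASSWORD",
]


def missing_env_vars(required, env=None):
    """Return the names in `required` that are unset or empty in `env` (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars(REQUIRED_VARS)
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Running this in the first notebook cell surfaces a forgotten `-e` flag before any Azure connection attempt fails with a less obvious error.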

Memory Strategy Overview

In our architecture, we use two types of LangGraph memory:

- 🔄 Short-term memory - Used to store message history and checkpoints, enabling the AI assistant to maintain context within a session.

- 📊 Long-term memory - Dedicated to storing user profile information, allowing the AI assistant to personalize interactions over time.

By using Azure Cosmos DB for short-term memory and Azure Database for PostgreSQL for long-term memory, the system ensures that the graph (the conversation with the AI) can be resumed from a saved state and that each user profile is preserved even after the application is interrupted.

The following code compiles the graph, explicitly passing the checkpointer (for saving conversation state) and the store (for long-term user profile data):

```python
# builder is the StateGraph defined in the notebook
graph = builder.compile(checkpointer=cosmosdb_saver, store=across_thread_memory)
```
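To make the split between the two tiers concrete, here is a toy, dictionary-based model. It is not LangGraph's checkpointer or store API; it only assumes, as the `config` used later in this article suggests, that `thread_id` scopes the conversation history and `user_id` scopes the profile. Under that assumption, a new thread starts with an empty history but still sees the same profile.

```python
from collections import defaultdict


class ToyMemory:
    """Illustrative stand-in for a checkpointer (per thread) and a store (per user)."""

    def __init__(self):
        self.threads = defaultdict(list)  # short-term: thread_id -> message history
        self.profiles = {}                # long-term: user_id -> profile dict

    def add_message(self, config, message):
        self.threads[config["configurable"]["thread_id"]].append(message)

    def history(self, config):
        return list(self.threads[config["configurable"]["thread_id"]])

    def save_profile(self, config, profile):
        self.profiles[config["configurable"]["user_id"]] = dict(profile)

    def load_profile(self, config):
        return self.profiles.get(config["configurable"]["user_id"], {})


memory = ToyMemory()
first = {"configurable": {"thread_id": "1", "user_id": "1"}}
second = {"configurable": {"thread_id": "2", "user_id": "1"}}

memory.add_message(first, "My name is Rafal and I prefer morning meetings.")
memory.save_profile(first, {"name": "Rafal Hollins", "meeting_preferences": "morning meetings"})

print(memory.history(second))       # new thread: no messages yet
print(memory.load_profile(second))  # same user: profile is still available
```

In the real system, the first dictionary's role is played by Cosmos DB through the checkpointer and the second by PostgreSQL through the store.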

Long-Term Memory with Azure PostgreSQL and Trustcall

For persistent, long-term memory, we use the `PostgresStore` from langgraph-checkpoint-postgres to store and manage user profiles in Azure Database for PostgreSQL.

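Here is how we initialise the long-term memory PostgresStore:

```python
import os

from psycopg import connect
from psycopg.rows import dict_row
from langgraph.store.postgres import PostgresStore

# ...

# PostgresStore expects an autocommit connection that returns rows as dicts
pg_conn = connect(postgres_connection_string, autocommit=True, row_factory=dict_row)
across_thread_memory = PostgresStore(pg_conn)
across_thread_memory.setup()  # creates the store tables on first run
```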
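The snippet above elides how `postgres_connection_string` is built. One possible approach, assuming it is assembled from the `POSTGRES_*` variables passed to the container, is sketched below. The helper name `build_pg_conninfo` is hypothetical, and `sslmode=require` is an assumption based on Azure Database for PostgreSQL Flexible Server enforcing TLS by default.

```python
import os
from urllib.parse import quote


def build_pg_conninfo(env=None):
    """Build a libpq connection URI from POSTGRES_* variables (hypothetical helper)."""
    env = os.environ if env is None else env
    # Percent-encode credentials so characters like "@" or "/" do not break the URI
    user = quote(env["POSTGRES_USER"], safe="")
    password = quote(env["POSTGRES_PASSWORD"], safe="")
    host = env["POSTGRES_HOST"]
    port = env.get("POSTGRES_PORT", "5432")
    database = env["POSTGRES_DB"]
    # Assumption: TLS is required, as is the default on Azure PostgreSQL Flexible Server
    return f"postgresql://{user}:{password}@{host}:{port}/{database}?sslmode=require"


example_env = {
    "POSTGRES_USER": "pgadmin",
    "POSTGRES_PASSWORD": "p@ss/word",
    "POSTGRES_HOST": "myserver.postgres.database.azure.com",
    "POSTGRES_DB": "langchain",
}
print(build_pg_conninfo(example_env))
```

The resulting string could then be passed to `connect(...)` in the initialisation code.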

To keep user profiles up to date as the user interacts with the AI assistant, we use trustcall. Trustcall applies incremental updates to the user profile through LLM-generated JSON patches as the conversation progresses.
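To illustrate the idea behind JSON-patch updates, here is a minimal pure-Python applier for the `add`, `replace`, and `remove` operations of RFC 6902, run against a profile like the one shown later in this article. It is not trustcall's implementation (trustcall has the LLM emit the patch and handles it internally); it supports only simple paths and ignores `~` escaping in JSON Pointer segments.

```python
import copy


def _resolve(doc, path):
    """Follow a JSON Pointer such as "/hobbies/0" and return (parent container, last segment)."""
    parts = path.lstrip("/").split("/")
    parent = doc
    for part in parts[:-1]:
        parent = parent[int(part)] if isinstance(parent, list) else parent[part]
    return parent, parts[-1]


def apply_patch(doc, operations):
    """Apply RFC 6902 "add", "replace" and "remove" operations to a copy of `doc`."""
    result = copy.deepcopy(doc)
    for op in operations:
        kind = op["op"]
        if kind not in ("add", "replace", "remove"):
            raise ValueError(f"unsupported operation: {kind}")
        parent, key = _resolve(result, op["path"])
        if isinstance(parent, list):
            # "-" means "past the last element" and is only meaningful for "add"
            index = len(parent) if key == "-" else int(key)
            if kind == "add":
                parent.insert(index, op["value"])
            elif kind == "replace":
                parent[index] = op["value"]
            else:
                del parent[index]
        elif kind == "remove":
            del parent[key]
        else:
            # On objects, "add" and "replace" both set the member (this toy skips
            # RFC 6902's rule that "replace" requires the member to exist)
            parent[key] = op["value"]
    return result


profile = {"name": "Rafal Hollins", "hobbies": ["gardening"], "working_hours": "09:00-17:00"}

# Hypothetical patch an LLM might emit after the user mentions a new hobby and new hours
patch = [
    {"op": "add", "path": "/hobbies/-", "value": "cycling"},
    {"op": "replace", "path": "/working_hours", "value": "08:00-16:00"},
]
updated = apply_patch(profile, patch)
print(updated)
```

Patching only the fields that changed, rather than regenerating the whole profile, is what keeps earlier facts from being silently dropped as the conversation grows.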

Short-Term Memory with Cosmos DB

For short-term memory, we use Azure Cosmos DB through the langgraph-checkpoint-cosmosdb library.

Azure Cosmos DB is a globally distributed, multi-model database service that provides low-latency, highly available storage. This makes it well suited to storing conversational context and the state of AI workflows.

Here is how we initialize the saver for use as a checkpointer:

```python
from langgraph_checkpoint_cosmosdb import CosmosDBSaver

# Checkpoints are written to the "checkpoints" container in the "langchaindb" database
cosmosdb_saver = CosmosDBSaver(database_name="langchaindb", container_name="checkpoints")
```

Microsoft Graph and Microsoft 365 Integration

This AI personal assistant integrates with Microsoft 365 via the O365 Python library, interfacing with the Microsoft Graph API for secure access to Office 365 services. Authentication uses OAuth 2.0, enabling scoped access without exposing user credentials.

We use the Office 365 Toolkit to interact with the user's calendar through two primary tools:

- ➕ Creating events - The `create_calendar_event` tool adds events to the user's default Outlook calendar, handling the subject, ISO-formatted start/end times, location, description, attendees, and timezone (from the user profile). It parses natural language requests (e.g., "Schedule a 2 PM meeting tomorrow") and creates events, including email invites.

- 📅 Retrieving events - The `get_calendar_events` tool fetches events within a configurable range (default: 14 days ahead), sorting and formatting them with details such as times, location, and description. Used for queries like "Show my schedule", it provides context-aware responses.
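The date-window logic behind a tool like `get_calendar_events` can be sketched independently of Microsoft Graph. The sketch below assumes events are plain dictionaries with ISO-formatted `start` and `end` strings (a hypothetical shape, not the O365 library's event objects). It keeps events that start within the next `days_ahead` days (default 14, matching the description above) and sorts them by start time; the sample events are made up for illustration.

```python
from datetime import datetime, timedelta


def events_in_window(events, now, days_ahead=14):
    """Return events starting in [now, now + days_ahead), sorted by start time."""
    window_end = now + timedelta(days=days_ahead)
    selected = [
        e for e in events
        if now <= datetime.fromisoformat(e["start"]) < window_end
    ]
    return sorted(selected, key=lambda e: datetime.fromisoformat(e["start"]))


def format_event(event):
    """Render one event as a single human-readable line."""
    start = datetime.fromisoformat(event["start"])
    end = datetime.fromisoformat(event["end"])
    location = event.get("location") or "no location"
    return f"{start:%a %d %b %H:%M}-{end:%H:%M} {event['subject']} ({location})"


now = datetime(2025, 3, 3, 9, 0)
events = [
    {"subject": "ABC kick-off", "start": "2025-03-12T14:00:00", "end": "2025-03-12T15:00:00", "location": "Teams"},
    {"subject": "Standup", "start": "2025-03-04T09:30:00", "end": "2025-03-04T09:45:00"},
    {"subject": "Quarterly review", "start": "2025-04-01T10:00:00", "end": "2025-04-01T11:00:00"},
]
for event in events_in_window(events, now):
    print(format_event(event))
```

Returning a sorted, pre-formatted list keeps the text handed back to the LLM short and chronological, which makes answers to "Show my schedule" easier to ground.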

The following code initializes an O365 `Account` object with the app registration credentials and tenant details, defines the access scopes, and authenticates the account to enable secure API interactions.

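```python
from O365 import Account

# Microsoft Graph permissions requested for the signed-in user
scopes = ['User.Read', 'Calendars.Read', 'Calendars.ReadBasic', 'Contacts.Read', 'Calendars.ReadWrite']

# credentials: the app registration's (client_id, client_secret) tuple
account = Account(
    credentials,
    tenant_id=TENANT_ID,
    auth_flow_type='authorization'
)
# ...
account.authenticate(requested_scopes=scopes)
# ...
```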

AI Assistant Agent in Action

Next, we run sections of the Jupyter notebook from the project's GitHub repository.

We begin by installing the required Python libraries, authenticating with Microsoft Graph, and establishing connections to both Azure Cosmos DB and Azure Database for PostgreSQL. Finally, we execute the main code to construct and initialise the LangGraph workflow.

Building User Personal Profile Through Conversation

Now that the stage is set, it's time to test our AI Assistant.

Let's start by running the following code to introduce ourselves to the AI:

```python
from langchain_core.messages import HumanMessage

# initiate a fresh chat session
config = {"configurable": {"thread_id": "1", "user_id": "1"}}

# set up the initial message from the user
user_msg = {"messages": HumanMessage(content="""
My name is Rafal Hollins
I live in London and work as a Cloud Architect.
I like gardening.
My working hours are between 09:00-17:00.
My email address is rafal@something.com.
I prefer morning meetings.
""")}

# execute the graph in streaming mode and display output
for chunk in graph.stream(user_msg, config, stream_mode="values"):
    chunk['messages'][-1].pretty_print()
```

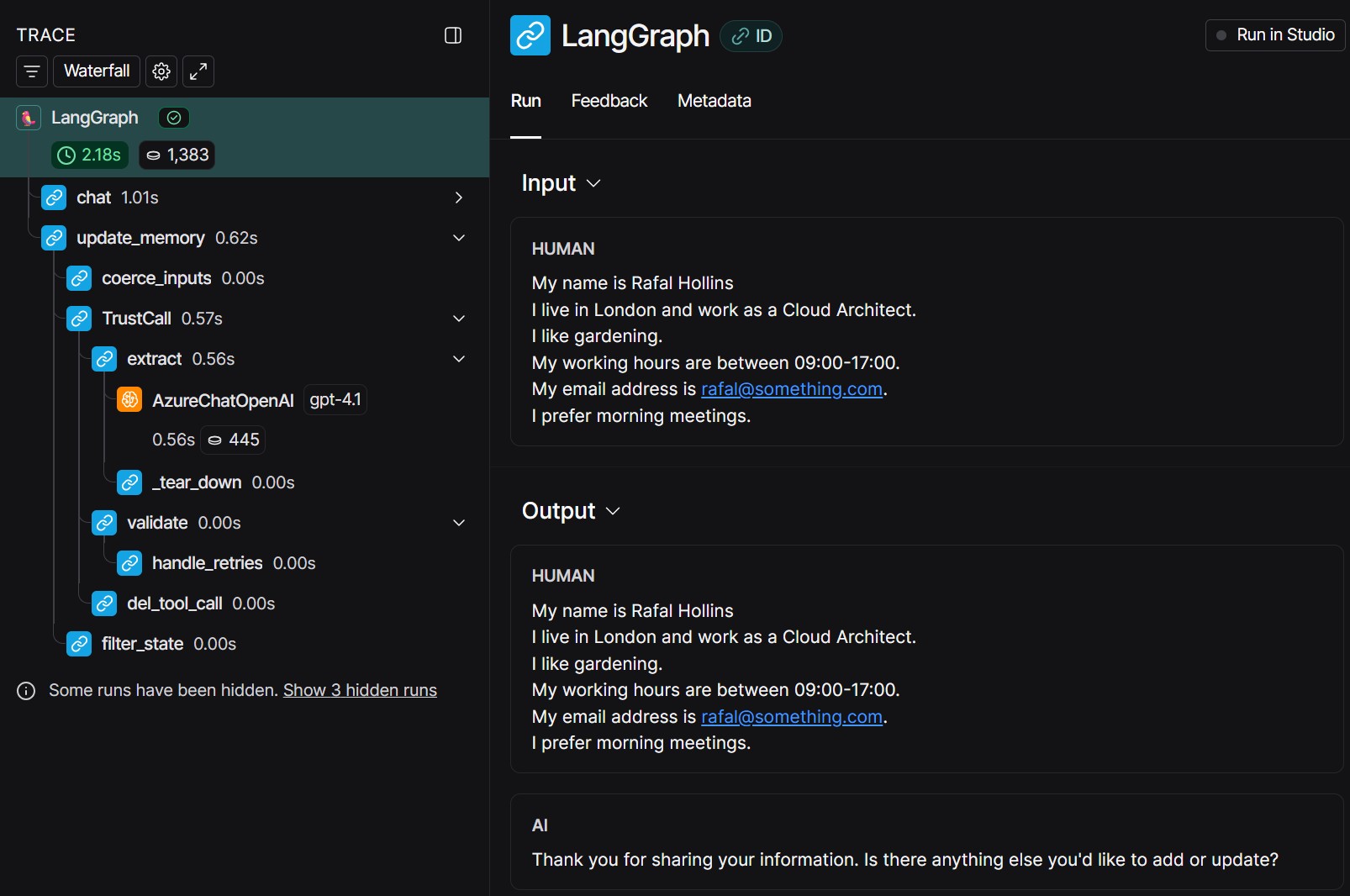

If we open the LangSmith website and locate our trace, we can see that the `chat` and `update_memory` LangGraph nodes were invoked and that the LLM updated the user profile based on the provided information.

Trustcall also validates the JSON patch generated by the LLM and retries if needed.
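The validate-and-retry step can be pictured as a loop: apply the proposed update, validate the result against the profile schema, and, if validation fails, ask the model again with the error message. The sketch below is a simplified illustration, not trustcall's internals. `fake_llm` stands in for the LLM call, the schema is hypothetical, and for brevity each proposal is a flat dictionary of fields rather than a JSON patch.

```python
# Hypothetical schema: expected Python type for each profile field
PROFILE_SCHEMA = {
    "name": str,
    "email": str,
    "timezone": str,
    "working_hours": str,
    "hobbies": list,
}


def validate(profile):
    """Return a list of validation errors (empty if the profile is valid)."""
    errors = []
    for field, value in profile.items():
        expected = PROFILE_SCHEMA.get(field)
        if expected is None:
            errors.append(f"unknown field '{field}'")
        elif not isinstance(value, expected):
            errors.append(f"'{field}' must be {expected.__name__}")
    return errors


def update_with_retries(profile, propose_update, max_attempts=3):
    """Merge a proposed update, validate it, and retry with error feedback on failure."""
    feedback = None
    for _ in range(max_attempts):
        candidate = {**profile, **propose_update(profile, feedback)}
        errors = validate(candidate)
        if not errors:
            return candidate
        feedback = "; ".join(errors)
    raise ValueError(f"no valid update after {max_attempts} attempts: {feedback}")


# Stand-in for the LLM: the first proposal has the wrong type, the retry fixes it
attempts = []


def fake_llm(profile, feedback):
    attempts.append(feedback)
    if feedback is None:
        return {"hobbies": "gardening"}
    return {"hobbies": ["gardening"]}


result = update_with_retries({"name": "Rafal Hollins"}, fake_llm)
print(result, "after", len(attempts), "attempts")
```

Feeding the validation error back into the next attempt is what lets the model correct itself instead of repeating the same malformed output.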

Let's see what is inside the Postgres "langchain" database and what the LLM added as part of our profile.

Execute the following SQL query:

```sql
SELECT "value" FROM public.store LIMIT 1000;
```

You should see the following output. The LLM generates and formats this profile content on its own. Later on, we will see how it uses this information to, for example, schedule events at the appropriate times.

```json
{
  "name": "Rafal Hollins",
  "role": "Cloud Architect",
  "email": "rafal@something.com",
  "hobbies": [
    "gardening"
  ],
  "contacts": [],
  "timezone": "Europe/London",
  "working_hours": "09:00-17:00",
  "meeting_preferences": "morning meetings"
}
```
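Fields such as `working_hours` are stored as free-form strings chosen by the LLM, so any code that relies on them has to parse them. As a hedged example, assuming the `HH:MM-HH:MM` format shown in the profile above, hypothetical helpers (not part of the project) could check whether a proposed slot falls within working hours and whether it matches a morning preference, taken here to mean a start before 12:00:

```python
from datetime import time


def parse_hours(working_hours):
    """Parse 'HH:MM-HH:MM' into (start, end) time objects."""
    start_text, end_text = working_hours.split("-")
    return time.fromisoformat(start_text), time.fromisoformat(end_text)


def slot_fits(profile, start, end):
    """True if the slot from start to end lies entirely within the profile's working hours."""
    day_start, day_end = parse_hours(profile["working_hours"])
    return day_start <= start < end <= day_end


def is_morning(start):
    # Assumption: "morning" means the meeting starts before noon
    return start < time(12, 0)


profile = {"working_hours": "09:00-17:00", "meeting_preferences": "morning meetings"}
print(slot_fits(profile, time(14, 0), time(15, 0)))    # True
print(slot_fits(profile, time(16, 30), time(17, 30)))  # False
print(is_morning(time(10, 0)))                         # True
```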

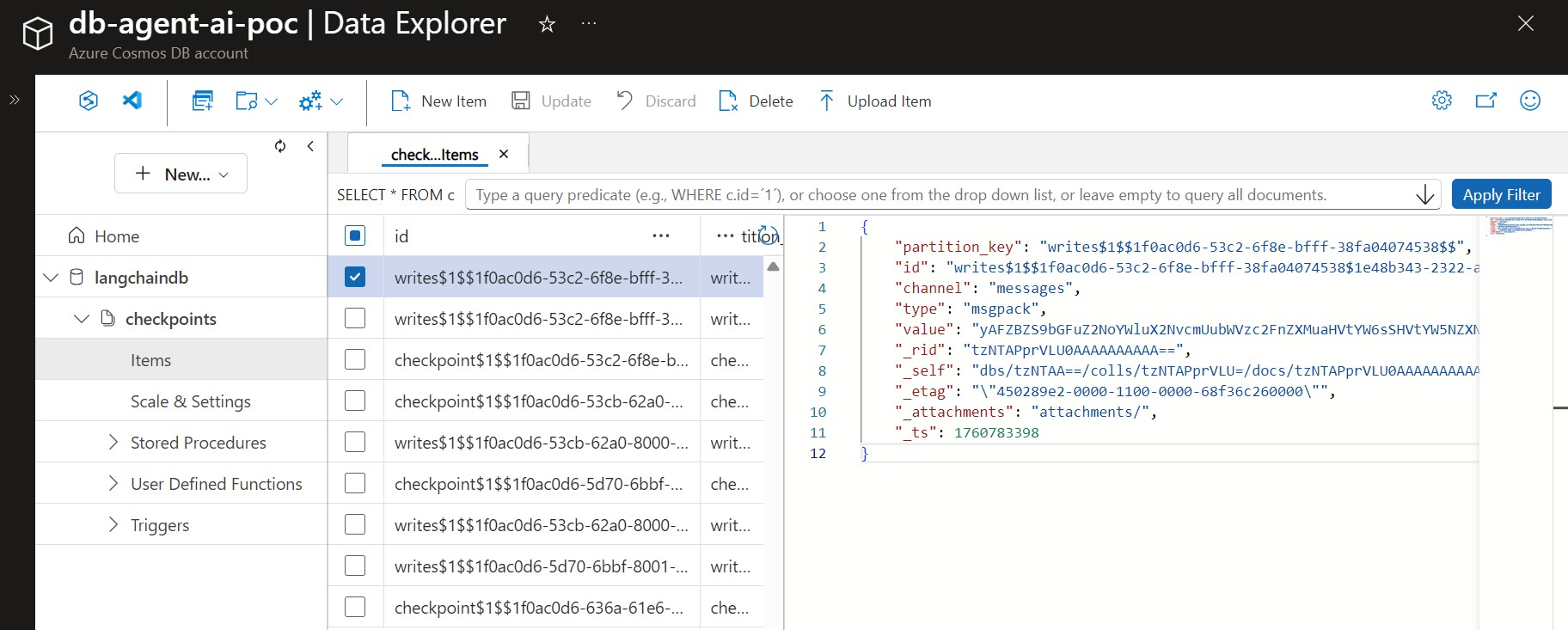

Now let's go to Azure Cosmos DB and see how the short-term memory has been stored.

LangGraph uses two collections: checkpoints for saving agent state, and writes for tracking updates. Each entry includes the channel, encoded data, and metadata.

Creating Calendar Events from Conversations

Next, we will instruct our AI Assistant to schedule a meeting for us in our Outlook calendar.

Run the following code in the Jupyter notebook:

```python
from langchain_core.messages import HumanMessage

# continue in the same thread as the same user
config = {"configurable": {"thread_id": "1", "user_id": "1"}}

# set up the message from the user
user_msg = {"messages": HumanMessage(content="""
I need to schedule a meeting with my team and stakeholders next Wednesday between 14:00 and 15:00
to discuss the cloud project called ABC and agree on the next steps.
Please also invite john@company.com and agnes@company.com.
Add agenda which will include review of business requirements, defining project scope,
confirmation of kick-off date and agreement on next steps
""")}

# execute the graph in streaming mode and display output
for chunk in graph.stream(user_msg, config, stream_mode="values"):
    chunk['messages'][-1].pretty_print()
```

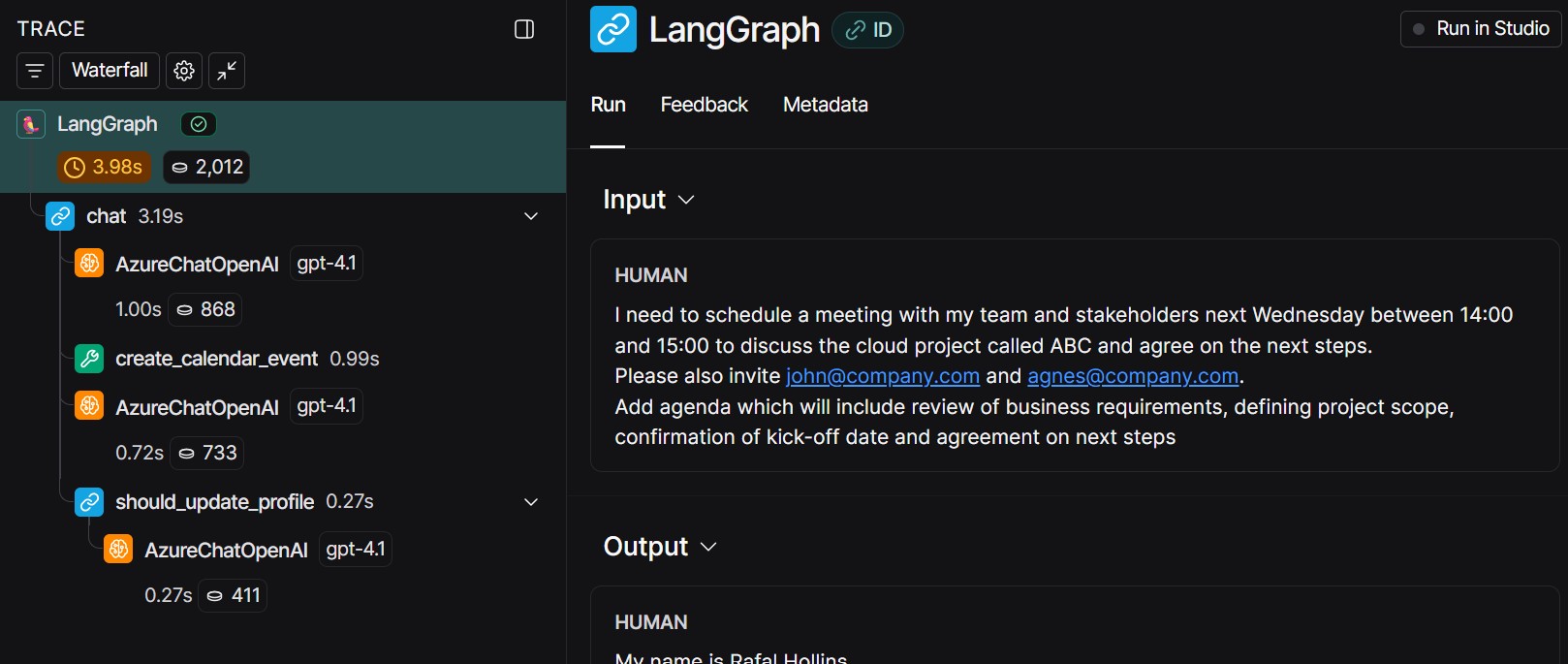

In this case, the chat node recognizes the meeting request and invokes the create_calendar_event tool according to user intent. It also determines that no user profile update is needed.
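In this project, turning "next Wednesday between 14:00 and 15:00" into ISO start and end times is presumably done by the LLM when it fills in the tool's arguments. A deterministic equivalent is sketched below purely to show the expected shape of those arguments. The helper `next_weekday` and the argument keys are hypothetical, and "next Wednesday" is interpreted as the first Wednesday strictly after today, which is only one reading of that phrase. The timezone comes from the profile, as described for `create_calendar_event`.

```python
from datetime import date, datetime, time, timedelta

WEDNESDAY = 2  # date.weekday(): Monday == 0


def next_weekday(today, weekday):
    """First date strictly after `today` that falls on `weekday`."""
    days_ahead = (weekday - today.weekday() - 1) % 7 + 1
    return today + timedelta(days=days_ahead)


def build_event_args(today, weekday, start, end, profile, subject, attendees):
    """Assemble ISO-formatted start/end times plus the profile timezone (hypothetical keys)."""
    day = next_weekday(today, weekday)
    return {
        "subject": subject,
        "start_datetime": datetime.combine(day, start).isoformat(),
        "end_datetime": datetime.combine(day, end).isoformat(),
        "timezone": profile["timezone"],
        "attendees": attendees,
    }


args = build_event_args(
    today=date(2025, 3, 3),  # a Monday
    weekday=WEDNESDAY,
    start=time(14, 0),
    end=time(15, 0),
    profile={"timezone": "Europe/London"},
    subject="Cloud project ABC: next steps",
    attendees=["john@company.com", "agnes@company.com"],
)
print(args["start_datetime"], args["end_datetime"], args["timezone"])
```

Keeping the wall-clock times naive and passing the IANA timezone separately lets the calendar service resolve daylight saving time, rather than baking a fixed UTC offset into the timestamps.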

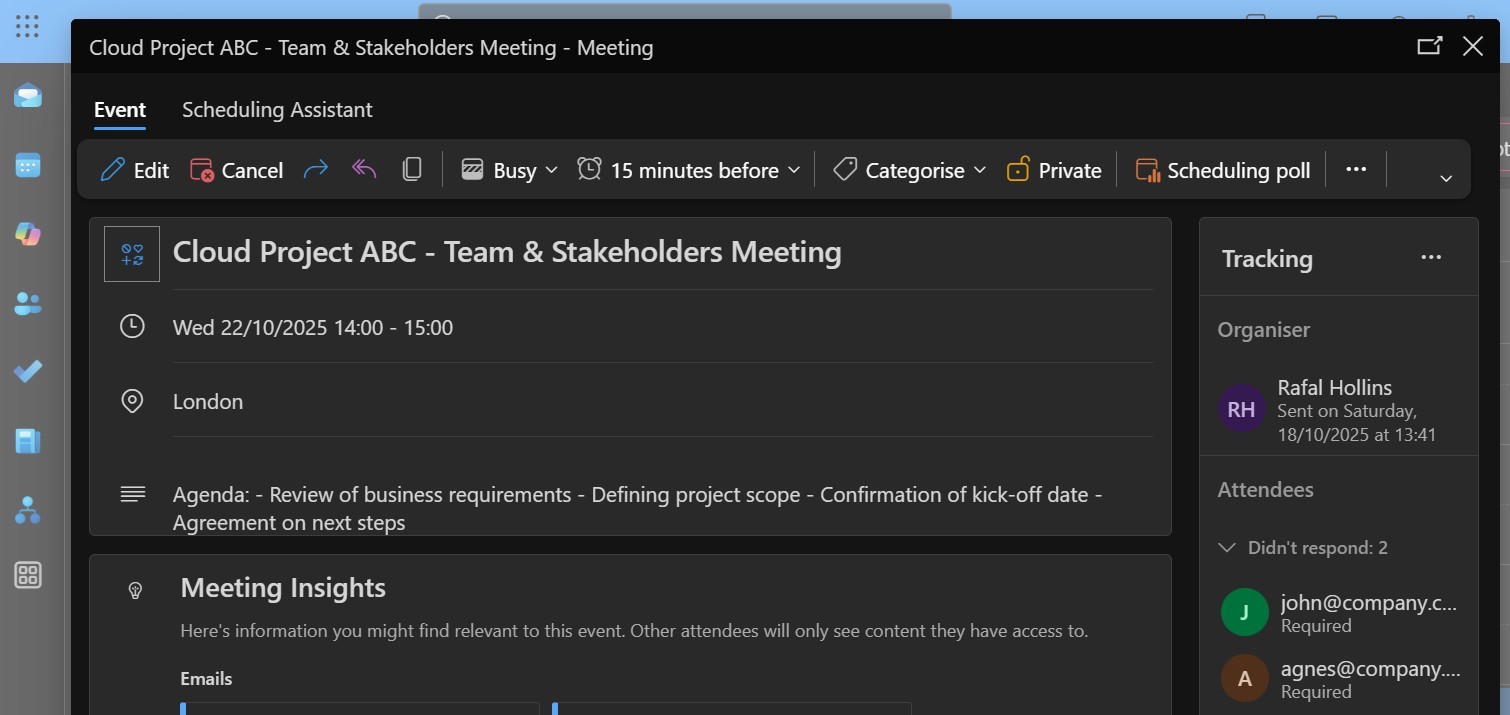

If we now verify the user's Outlook calendar, we can see that the meeting request was created at the correct time according to the user's time zone. The agenda is included, and invitations were sent to all participants.

Final Thoughts

What we haven't covered are LangGraph's native capabilities for hosting enterprise applications, which are worth exploring.